Prioritization is a complex problem to solve.

⏫ When we want to prioritize the next features to deliver, or the next initiatives to run, we have so many details to analyze. So many data points are involved.

👥 We want to ensure that we are helping our users to solve their problems efficiently. We want to be sure that we are providing a smooth and astonishing experience to our customers.

🎯 And at the same time, we want to ensure that we are supporting our business, that we are bringing in enough revenue to keep running our product for years to come.

So when we start discussing priorities, everyone has their own ideas. Everyone thinks they know exactly where we should go next, with very strong beliefs. I am 100% sure I am speaking to your heart here. I am sure you’re experiencing this as well.

If we are working in a company that is operating in a market with a lot of uncertainty, it’s even harder. Starting a brand new product, like most startups are doing, makes this task even more complicated.

The problem when prioritizing initiatives

The fear that we are experiencing when prioritizing is mainly due to the confusion created by all the details that we are humanly unable to manage. It’s simply too much!

And we are all feeling it. We are feeling lost in front of all the possibilities, all the data. And humans’ egos hate to feel lost. And when the egos jump in, the conflicts start.

And we spend more time defining what “best” means than actually taking action and moving forward.

All these details lead to confusion: no one is able to be sure, and most of us feel lost in front of all the possibilities.

At the end of the day, everyone is frustrated, and we end up with poor prioritization, driven only by the loudest people around the table.

While we are fighting to decide who is right and who is not, we tend to forget the most important thing: serving our users!

Prioritizing initiatives with RICE Scoring

⏫ Calculating the RICE Score is the best solution we have ever used.

We have applied it within large companies as well as smaller startups. We have run it for highly uncertain topics as well as more controlled situations.

It is pretty simple, so let’s see how to use it.

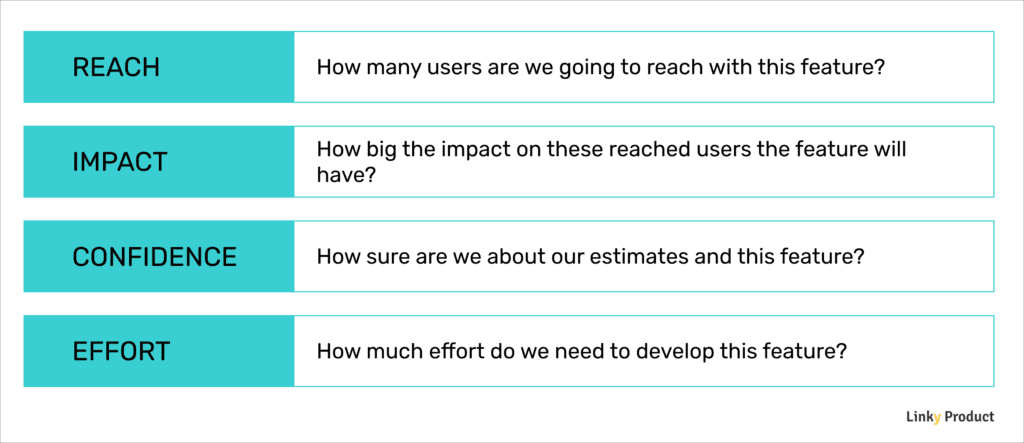

The RICE Score is composed of 4 components: Reach, Impact, Confidence and Effort.

RICE Score - R is for Reach

👥 Reach is the number of users that are going to use this feature. It measures how widely the initiative will spread through our userbase. It also gives us a sense of the risk that we are taking.

We must select the most realistic number possible.

The benefit of using this criterion is that it takes into account the number of users that we are going to impact. If we have two new features, one reaching 2,000 users and the second 4,000, there is a good chance that the second has a higher priority.

- We have 10,000 users.

- We have an initiative to improve a feature currently used by 2,000 of these users. The goal is to have 1,000 new users use this feature.

- The Reach of this initiative would be 3,000 users – 2,000 regular users + 1,000 new users.
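The Reach arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `reach` and the "share of userbase" figure are our own additions, not part of the RICE method itself.

```python
def reach(current_feature_users: int, expected_new_users: int) -> int:
    """Reach = users already on the feature + new users we expect to gain."""
    return current_feature_users + expected_new_users


total_users = 10_000
initiative_reach = reach(current_feature_users=2_000, expected_new_users=1_000)

# Extra context (an assumption of this sketch): how much of the userbase is touched.
share_of_userbase = initiative_reach / total_users

print(initiative_reach)             # 3000
print(f"{share_of_userbase:.0%}")   # 30%
```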

RICE Score - I is for Impact

Impact estimates how much value the feature will deliver to each user it reaches. The benefit of integrating this criterion is that it will prioritize features that are expected to deliver more value to our users.

It’s based on a pretty simple scale:

- 3 = massive impact

- 2 = high impact

- 1 = medium impact

- 0.5 = low impact

- 0.25 = minimal impact
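If we keep our scores in a script or a spreadsheet export, the scale above can be stored as a simple lookup table. The names `IMPACT_SCALE` and `impact_score` are hypothetical, chosen for this sketch only.

```python
# The five-level Impact scale from the list above.
IMPACT_SCALE = {
    "massive": 3.0,
    "high": 2.0,
    "medium": 1.0,
    "low": 0.5,
    "minimal": 0.25,
}


def impact_score(label: str) -> float:
    """Translate an Impact label into its numeric value, ignoring case and spaces."""
    key = label.strip().lower()
    if key not in IMPACT_SCALE:
        raise ValueError(f"Unknown impact label: {label!r}")
    return IMPACT_SCALE[key]


print(impact_score("high"))     # 2.0
print(impact_score("Minimal"))  # 0.25
```

Rejecting unknown labels keeps everyone on the same five levels, which is the whole point of using a shared scale.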

RICE Score - C is for Confidence

👍 Confidence is the trickiest to explain, but probably the one that brings the most value. It’s all about answering the question “How confident are you about the numbers that we are providing here?”.

The benefit of this criterion is that it acknowledges the gut feeling we all have. We are putting a number on our feeling!

It’s a percentage, representing how confident we are in the scores that we are currently providing. How sure are we that the Reach is correct? How sure are we that the Impact is correct?

Here are some factors that affect the Confidence level:

- Do we have user interviews validating that the feature actually solves a problem? If we do, the Confidence level is probably high!

- Do we have data to support our decisions on this feature? If we do, the Confidence level is probably higher as well.

- Is this feature coming only from internal discussions? If so, the Confidence level should probably be low.
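To make these questions concrete, here is one possible way to turn them into a starting Confidence value. The function name and the three percentages (90%, 70%, 40%) are purely hypothetical placeholders for this sketch. RICE does not prescribe them, and each team should agree on its own levels.

```python
def confidence_level(has_user_interviews: bool, has_supporting_data: bool) -> float:
    """Suggest a starting Confidence (0 to 1) from the evidence we have.

    The tiers below are illustrative assumptions, not an official scale.
    """
    if has_user_interviews and has_supporting_data:
        return 0.9  # validated by users and backed by data
    if has_user_interviews or has_supporting_data:
        return 0.7  # one source of evidence
    return 0.4      # idea comes only from internal discussions


print(confidence_level(True, True))    # 0.9
print(confidence_level(False, True))   # 0.7
print(confidence_level(False, False))  # 0.4
```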

💡 One piece of personal advice here: this criterion often triggers discussion, as it can be hard to understand the first time we see it. It’s important to take the time to explain it, and at some point to just try it anyway. The next prioritization sessions will be much easier; it’s just a matter of getting used to it 😉

RICE Score - E is for Effort

Finally, probably the easiest to explain: Effort 💼. It’s all about estimating, at a very high level, how much time we need to develop this feature.

Integrating Effort into the prioritization process ensures that cost is part of our decisions.

Following the same idea as Reach and Impact: between a feature that takes 2 months to develop and one that takes 6 months, we all want to develop the less expensive one 🙂

So when estimating Effort, we are looking to answer this question: “How many months does my current team need to develop this feature?”.

So if the answer is “3 months”, the “E” score will be 3.

The RICE Score formula

The RICE Score is simple to calculate: (R * I * C) / E

The higher the Score, the higher the Priority!
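As a minimal sketch, the formula translates directly into a small Python function. The function name, the input checks, and the sample values in the last lines are our own assumptions for illustration. Confidence is passed as a fraction (80% becomes 0.8) and Effort in team-months.

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """Compute (R * I * C) / E.

    confidence is a fraction between 0 and 1; effort is in team-months.
    """
    if effort <= 0:
        raise ValueError("effort must be greater than zero")
    if not 0 <= confidence <= 1:
        raise ValueError("confidence must be between 0 and 1 (e.g. 0.8 for 80%)")
    return reach * impact * confidence / effort


# Hypothetical initiative: 3,000 users reached, high impact (2),
# 80% confidence, 3 months of effort.
example = rice_score(reach=3_000, impact=2, confidence=0.8, effort=3)
print(f"{example:.1f}")  # 1600.0
```

The checks are there because the two most common mistakes are typing Confidence as 80 instead of 0.8 and leaving Effort at zero.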

Using the RICE Score has multiple incredible benefits:

- We are comparing every initiative against the exact same scoring method.

- Confidence is acknowledged! If one feature has a better chance of success than another, it gets a strong bonus thanks to the Confidence criterion.

💬 Here is an example:

R=500, I=2, C=55%, E=2 // Score = 275

R=375, I=2, C=85%, E=2 // Score = 318.75

See?

Even though initiative #2 reaches fewer users, its higher Confidence makes it the higher priority from a Product standpoint.

We are reducing the risk and giving ourselves a better chance of building a successful feature.
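The comparison between the two initiatives can be reproduced with a short script. The `Initiative` class and its field names are hypothetical, written for this sketch; the numbers are the ones from the example.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    reach: int
    impact: float
    confidence: float  # fraction between 0 and 1
    effort: float      # team-months

    @property
    def score(self) -> float:
        """RICE Score: (R * I * C) / E."""
        return self.reach * self.impact * self.confidence / self.effort


backlog = [
    Initiative("Initiative #1", reach=500, impact=2, confidence=0.55, effort=2),
    Initiative("Initiative #2", reach=375, impact=2, confidence=0.85, effort=2),
]

# Highest score first: this is our priority order.
ranked = sorted(backlog, key=lambda item: item.score, reverse=True)
for item in ranked:
    print(f"{item.name}: {item.score:.2f}")
# Initiative #2: 318.75
# Initiative #1: 275.00
```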

If we really want to test initiative #1, we can raise the confidence level. Do we have more data? Can we interview users before building the feature? This might change everything 😉
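How much would the Confidence of initiative #1 need to rise? Rearranging the formula gives C = Score * E / (R * I), which we can sketch as follows. The function name is our own, and the target is simply the score of initiative #2.

```python
def required_confidence(target_score: float, reach: float, impact: float, effort: float) -> float:
    """Confidence needed to match target_score, from C = Score * E / (R * I)."""
    return target_score * effort / (reach * impact)


# Score of initiative #2: (375 * 2 * 0.85) / 2
score_initiative_2 = 375 * 2 * 0.85 / 2

# Initiative #1 has R=500, I=2, E=2.
needed = required_confidence(score_initiative_2, reach=500, impact=2, effort=2)
print(f"{needed:.2%}")  # 63.75%
```

So under these numbers, moving initiative #1 from 55% to just above 64% Confidence, for instance after a few user interviews, would be enough to put it ahead.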

🤝 At Linky Product, we regularly implement the RICE Score with our clients and teams.

We generate insightful discussions about the initiatives and the confidence level of our decisions.

Another benefit of the RICE Score for our clients is that everyone agrees with the decisions being made: we can quickly explain why we are prioritizing some initiatives and not others. Frustration is much lower, as discussions are based on tangible numbers instead of opinions and ego.

If you want to see an example of RICE Score in action, you can take a look at our Product Strategy Examples.